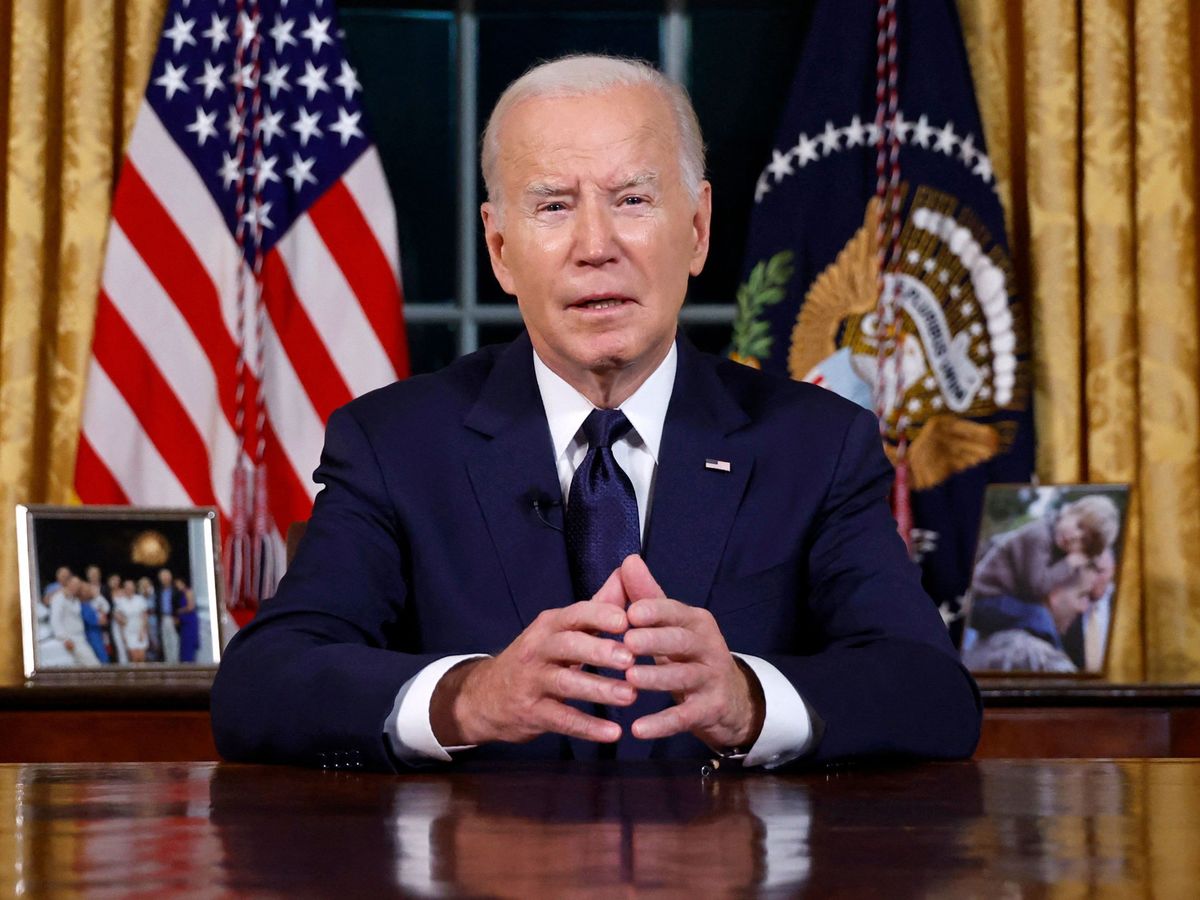

The world has been waiting for the United States to get its act together on regulating artificial intelligence—particularly since it’s home to many of the powerful companies pushing at the boundaries of what’s acceptable. Today, U.S. president Joe Biden issued an executive order on AI that many experts say is a significant step forward.

“I think the White House has done a really good, really comprehensive job,” says Lee Tiedrich, who studies AI policy as a distinguished faculty fellow at Duke University’s Initiative for Science & Society. She says it’s a “creative” package of initiatives that works within the reach of the government’s executive branch, acknowledging that it can neither enact legislation (that’s Congress’s job) nor directly set rules (that’s what the federal agencies do). Says Tiedrich: “They used an interesting combination of techniques to put something together that I’m personally optimistic will move the dial in the right direction.”

This U.S. action builds on earlier moves by the White House: a “Blueprint for an AI Bill of Rights” that laid out nonbinding principles for AI regulation in October 2022, and voluntary commitments on managing AI risks that 15 leading AI companies made in July and September.

And it comes in the context of major regulatory efforts around the world. The European Union is currently finalizing its AI Act and is expected to adopt the legislation this year or early next; that act bans certain AI applications deemed to pose unacceptable risks and establishes oversight for high-risk applications. Meanwhile, China has rapidly drafted and adopted several laws on AI recommender systems and generative AI. Other efforts are underway in countries such as Canada, Brazil, and Japan.

What’s in the executive order on AI?

The executive order tackles a lot. The White House has so far released only a fact sheet about the order, with the final text to come soon. That fact sheet starts with initiatives related to safety and security, such as a provision that the National Institute of Standards and Technology (NIST) will come up with “rigorous standards for extensive red-team testing to ensure safety before public release.” Another states that companies must notify the government if they’re training a foundation model that could pose serious risks, and must share the results of their red-team testing.

The order also discusses civil rights, stating that the federal government must establish guidelines and training to prevent algorithmic bias—the phenomenon in which the use of AI tools in decision-making systems exacerbates discrimination. Brown University computer science professor Suresh Venkatasubramanian, who coauthored the 2022 Blueprint for an AI Bill of Rights, calls the executive order “a strong effort” and says it builds on the Blueprint, which framed AI governance as a civil rights issue. However, he’s eager to see the final text of the order. “While there are good steps forward in getting info on law-enforcement use of AI, I’m hoping there will be stronger regulation of its use in the details of the [executive order],” he tells IEEE Spectrum. “This seems like a potential gap.”

Another expert waiting for details is Cynthia Rudin, a Duke University professor of computer science who works on interpretable and transparent AI systems. She’s concerned about AI technology that makes use of biometric data, such as facial-recognition systems. While she calls the order “big and bold,” she says it’s not clear whether the provisions that mention privacy apply to biometrics. “I wish they had mentioned biometric technologies explicitly so I knew where they fit or whether they were included,” Rudin says.

While the privacy provisions do include some directives for federal agencies to strengthen their privacy requirements and support privacy-preserving AI training techniques, they also include a call for action from Congress. President Biden “calls on Congress to pass bipartisan data privacy legislation to protect all Americans, especially kids,” the fact sheet states. Whether such legislation would be part of the AI-related legislation that Senator Chuck Schumer is working on remains to be seen.

Coming soon: Watermarks for synthetic media?

Another hot-button topic in these days of generative AI, which can produce realistic text, images, and audio on demand, is how to help people tell real media from synthetic media. The order instructs the U.S. Department of Commerce to “develop guidance for content authentication and watermarking to clearly label AI-generated content.” Which sounds great. But Rudin notes that while there’s been considerable research on how to watermark deepfake images and videos, it’s not clear “how one could do watermarking on deepfakes that involve text.” She’s skeptical that watermarking will have much effect, but says that if other provisions of the order force social-media companies to reveal the effects of their recommender algorithms and the extent of disinformation circulating on their platforms, that could cause enough outrage to force a change.

Susan Ariel Aaronson, a professor of international affairs at George Washington University who works on data and AI governance, calls the order “a great start.” However, she worries that the order doesn’t go far enough in setting governance rules for the data sets that AI companies use to train their systems. She’s also looking for a more defined approach to governing AI, saying that the current situation is “a patchwork of principles, rules, and standards that are not well understood or sourced.” She hopes that the government will “continue its efforts to find common ground on these many initiatives as we await congressional action.”

While some congressional hearings on AI have focused on the possibility of creating a new federal AI regulatory agency, today’s executive order suggests a different tack. Duke’s Tiedrich says she likes this approach of spreading responsibility for AI governance across many federal agencies, tasking each with overseeing AI in its own area of expertise. The definitions of “safe” and “responsible” AI will differ from application to application, she says. “For example, when you define safety for an autonomous vehicle, you’re going to come up with a different set of parameters than you would when you’re talking about letting an AI-enabled medical device into a clinical setting, or using an AI tool in the judicial system where it could deny people’s rights.”

The order comes just a few days before the United Kingdom’s AI Safety Summit, a major international gathering of government officials and AI executives to discuss AI risks relating to misuse and loss of control. U.S. vice president Kamala Harris will represent the United States at the summit, and she’ll be making one point loud and clear: After a bit of a wait, the United States is showing up.

Eliza Strickland is a senior editor at IEEE Spectrum, where she covers AI, biomedical engineering, and other topics. She holds a master’s degree in journalism from Columbia University.